Innovation for Advancing with Customers: Digital Systems & Services

Research & Development

Column: Trends in Generative AI and Research at Hitachi

- Toshihiro Kujirai, Masayoshi Mase

- Media Intelligent Processing Research Department, Advanced Artificial Intelligence Innovation Center, Research & Development Group, Hitachi, Ltd.

Generative AI Boom

Generative artificial intelligence (AI) is experiencing an unprecedented boom. The Midjourney* AI service launched in July 2022 is able to generate images from text prompts at a level of quality that clearly equals or betters what a human might produce. Controversially, a winning image in an art competition turned out to have been generated using this service.

The generative AI boom was set in motion by the ChatGPT chatbot launched in November 2022. The launch was a big hit, with its easy-to-use chat interface and the surprising number of uses to which it can be put attracting more than 100 million users in just two months.

Microsoft has a large investment in OpenAI, the company that developed ChatGPT, and offers services that include ChatGPT on Azure* and Generative Pretrained Transformer (GPT), the large language model on which ChatGPT is based. A steady stream of tools that extend the functions of ChatGPT have also been released. Competition is intensifying, with many companies developing their own large language models, including startups as well as the large US IT companies like Google, Amazon, and Meta.

This raises the question of what makes generative AI so different from its predecessors that it has attracted all this interest. One major difference is that past AI systems were designed to perform specific functions, such as identifying defective items from images of industrial products. They worked by collecting training data and then building and running the model. Generative AIs, on the other hand, were not designed with particular uses in mind.

While the GPT large language model at the heart of ChatGPT has a wide range of uses, including summarizing, translating, and correcting texts, it was not designed explicitly to perform these functions.

GPT is trained on a large amount of text data (documents). The way this training works is that it reads the text up to a certain point and then adjusts its internal parameters iteratively until it can predict with high accuracy which word will come next. Although this is all it does, choosing an appropriate input prompt will cause it to perform functions such as summarizing, translating, or correcting. This is called the emergence of functionalities.
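
The idea of next-word prediction can be illustrated with a deliberately tiny sketch. It is not how GPT is implemented: GPT adjusts the parameters of a large neural network, whereas this toy simply counts which word follows which. The corpus and the function names `train_bigram` and `predict_next` are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Toy corpus, invented for this example.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram(corpus)
print(predict_next(model, "the"))
```

Even this crude model "completes" a prompt by choosing a likely continuation, which is the behavior that, at vastly greater scale, lets a prompt steer a large language model toward summarizing or translating.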

The diverse uses of the AI, beyond what its developers ever envisaged, arise from its countless users trying out different things. One source of the energy behind this current boom in generative AI is that its users themselves keep coming up with novel capabilities that expand its possibilities.

Many companies are taking a collective bottom-up approach to finding uses for the technology, such as holding in-house competitions to come up with ideas for its use. Top-down initiatives, on the other hand, include the use of generative AI to resolve management challenges, such as making the sharing of information between departments more efficient, passing on expertise as the number of experienced staff continues to fall, and bringing software development work in house.

While an earlier AI boom took place in the early to mid-2010s as people sought ways to use AI techniques such as deep learning, technology-driven uses of AI in many cases got no further than the technology verification step at the proof-of-concept (PoC) stage.

Since the late 2010s, however, companies have been setting up organizations to drive digital transformation (DX), adopting an objective-driven approach that is based on the specific challenges they face. With this has come increasing success at taking AI beyond the PoC stage to deliver actual business results. Now, however, with ChatGPT and other generative AIs attracting a lot of attention, instances involving technology-driven PoC projects are once again on the rise with people asking what the technology can be used for. When it comes to putting generative AI to use, it will likely be important to pursue a dual strategy that includes the bottom-up approach described above as well as objective-driven top-down initiatives.

Opportunities and Risks Posed by Generative AI

Along with intensifying competition in the development of generative AI and the growth in its use across a wide variety of knowledge work, concerns are also being raised about its risks, which include copyright violations, privacy, AI ethics, and information leaks. Of particular note is the way in which large language models can present untruths as fact, a phenomenon known as “hallucination.” While choosing prompts wisely can reduce this tendency to some extent, the phenomenon is inherent in the mechanism of GPT, which works by choosing words on probabilistic criteria. This makes it difficult to eradicate entirely. It can also be thought of as the flip side of the high levels of creativity exhibited by large language models. Along with the risks that use of generative AI will violate rights or leak information, the trustworthiness of its output also needs to be treated with due caution.

The opportunities and risks presented by generative AI are a topic of vigorous debate in academia, with Stanford University publishing a detailed report(1) in 2021 that put forward the concept of a “foundation model” and considered possible uses and impacts on society as well as the technical aspects of the technology. The explosive growth in the use of ChatGPT and other generative AIs has also driven public debate. Countries around the world are establishing legal frameworks and guidelines to prevent overconfidence in the capabilities of generative AI, to avoid the violation of human rights, and to put measures in place against malicious use.

In Europe, the EU AI Act, the world’s first comprehensive regulation on AI, was proposed in April 2021(2) and is the subject of ongoing deliberation. The draft revision from the final-stage deliberations at the European Parliament in June 2023 included provisions requiring the developers of generative AIs that use foundation models to review safety, indicate when material is the output of an AI, and disclose copyrighted material used for training. Broad agreement on the act was reached in December 2023. In the USA, an executive order on the safety of AI was issued in October 2023 obliging companies developing foundation models to notify the government when they perform model training and to share the results of safety and security assessments with government. In Japan, the AI Strategy Council played a central role in the publishing of a strategy for AI development and use, including generative AI(3), with further work on preparing guidelines for business.

Progress is also being made on international harmonization. The leaders’ declaration of the G7 Hiroshima AI Process was adopted in October 2023(4). This contained guidelines for everyone involved in AI, from development to applications, and a code of conduct tailored specifically for AI developers. At the AI Safety Summit hosted by the UK, meanwhile, the Bletchley Declaration(5) was agreed in November 2023 as a voluntary initiative for AI safety by the EU and 28 nations, including the USA, China, Japan, and countries in Europe.

International technical standards will likely play an important part in putting these measures into practice. International standards for AI have been developed by the ISO/IEC JTC 1/SC 42(6). The ISO/IEC 42001 standard for AI management systems has been developed along with a large number of standards on AI reliability.

Hitachi published AI ethics principles for its Social Innovation Business in February 2021 and the dedicated AI ethics team at the Lumada Data Science Laboratory (LDSL) has been heavily involved with in-house education and with putting risk assessment into practice. Hitachi prepared in-house usage guidelines in April 2023 to accompany the rapid uptake of generative AI. Along with being diligent about highlighting risks, it has established practices for incorporating appropriate safety management into infrastructure and operating under the advisement of specialists. The Generative AI Center was established in May 2023 to make use of generative AI in ways that also manage the risks.

Research Work

AI research at Hitachi got underway in the 1960s with work on media and intelligent processing involving images, speech, and language processing. This work found uses in product inspection systems, automated teller machines (ATMs) for overseas markets, and finger vein authentication. Natural language processing was among the earliest research topics and Hitachi continues to win top prizes at international competitions in this field. A debating AI was developed in 2013 that, when given a proposition, could generate text arguing for or against by extracting and collating information from a large volume of documents, considering the question in terms of economics, the environment, politics, and so on(7). Research on generative AI at Hitachi draws on this past work.

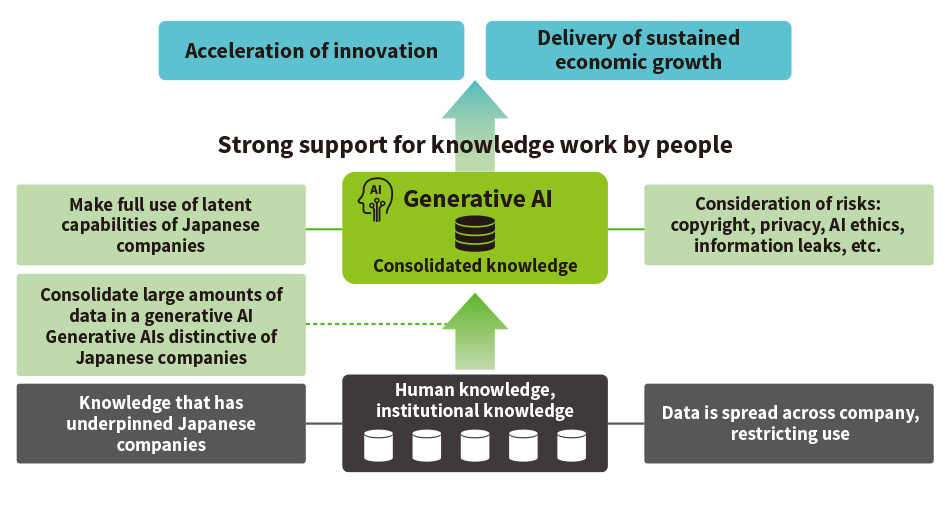

Japanese companies hold institutional knowledge and the knowledge of experienced staff that underpins their strength. However, as this knowledge is scattered over many different locations and may never have been documented, considerable scope yet remains for putting it to better use. Hitachi believes that generative AI can serve as a tool for collating and interpreting this knowledge.

Making full use of the latent knowledge that companies possess to provide strong support for knowledge work undertaken by people, without ignoring copyright and privacy issues, will allow those people to focus on more creative work. Generative AI has a part to play in every area, from extracting knowledge to collating it and putting it to use. Nevertheless, it is insufficient on its own and Hitachi believes it will need to be combined with natural language processing techniques built up through past work.

By drawing on knowledge acquired from work on operational technology (OT), IT, and products and a capacity for research into highly reliable AI honed over 60 years of effort, Hitachi will accelerate innovation and deliver sustained economic growth.

REFERENCES

- 1) Bommasani et al., “On the Opportunities and Risks of Foundation Models” (Aug. 2021)

- 2) European Commission, “Proposal for a REGULATION OF THE EUROPEAN PARLIAMENT AND OF THE COUNCIL LAYING DOWN HARMONISED RULES ON ARTIFICIAL INTELLIGENCE (ARTIFICIAL INTELLIGENCE ACT) AND AMENDING CERTAIN UNION LEGISLATIVE ACTS” (Apr. 2021)

- 3) AI Strategy Council, “Skeleton Draft of the New AI Business Operator Guidelines,” documents from 5th council meeting (Sept. 2023) (in Japanese)

- 4) “G7 Leaders' Statement on the Hiroshima AI Process” (Oct. 2023)

- 5) “The Bletchley Declaration by Countries Attending the AI Safety Summit, 1-2 November 2023” (Nov. 2023)

- 6) ISO/IEC JTC1/SC42: Artificial Intelligence

- 7) “Creating a Society in which Both People and AI are Smarter: Progress on Debating AI” (Feb. 2019) (in Japanese)

1. Self-Service Platform for Industrial Computer Vision with Domain Knowledge Integration

Video analytics is widely used for industrial applications such as inspection. However, most deployments are point solutions because there is no systematic process for the customer to inform the machine learning engineer about the domain context of the video data or to provide feedback about the performance of the machine learning model. To address this, Hitachi developed a self-service video analytics platform that scales across deployments with minimum system integration. This platform implements a closed-loop cycle for cloud-based model training, lifecycle management, and edge orchestration based on AWS*. Hitachi uses generative AI to seamlessly explore long video files to identify regions of interest and label images with domain context using natural language descriptions. The platform has been deployed at Hitachi Astemo to enable multiple applications across maintenance, quality, and operations, and has demonstrated cost reductions and faster solution deployment. It is also used to orchestrate all video analytics use cases that Hitachi Digital Services will deliver for the Hitachi Rail WMATA plant.

(Hitachi America Ltd.)

![[01] Overview of self-service platform for industrial video analytics](/rev/archive/2024/r2024_01/16/image/fig_01.png)

2. Development of App Using Behavior Change Design to Encourage Self-directed Work Behaviors by Hitachi Staff

Work reporting and the submission of daily reports form part of workers’ daily routines. With working styles becoming more diverse, it is important for workplace management that these tasks be done properly. The problem, however, is that uniform, undifferentiated follow-up does little to encourage diligence, because it makes it difficult for employees to develop a sense of personal engagement.

In response, to ensure that work reporting happens on a daily basis, Hitachi has developed a popup app in which reminders play a key role. The design of the app draws on knowledge of behavior change in the form of the transtheoretical model (TTM) and cognitive bias. It works by determining employee needs based on the characteristics of reporting, such as its frequency and timing, which were obtained from interviews and questionnaires. Hitachi has demonstrated that, by adjusting the displayed content and frequency of display based on these requirements, the app encourages self-directed work behaviors by staff.

The app has been rolled out for use by about 12,000 staff at Hitachi, Ltd. Further testing is being done to extend its use to other types of work.

![[02] App-based initiative designed using knowledge of behavior change](/rev/archive/2024/r2024_01/16/image/fig_02.png)

3. Use of Personal Authentication Service Based on Individual Number Card

While the increased uptake of Japan’s My Number card (individual number card) has given impetus to wider use of the Japanese Public Key Infrastructure (JPKI) service for identity verification across both the public and private sectors, services from both sectors that use the individual number card are developed separately for each use case. This raises issues such as investment inefficiency due to the duplication of development work and difficulties in getting services to work smoothly with one another.

To overcome these challenges, Japan’s Digital Agency has been investigating ways of giving users reliable, secure, and convenient online access to public- and private-sector services by supplying a personal authentication application that uses JPKI as a trust anchor and provides functions such as identity federation. In response, Hitachi has been developing technologies for purposes such as: (1) cardless authentication using a smartphone during normal use, with reliable identity verification guaranteed by JPKI, (2) identity federation for unified access to a wide range of public- and private-sector services, (3) secure management of personal ID information with the ID Wallet service and use of that information when accessing services, and (4) implementation of a remote signature service for the digital signatures that are used online for user agreement and to indicate consent. The goal is to provide an environment for safe and secure digital transformation (DX) with a guarantee of trust.

![[03] Use of personal authentication service based on individual number card](/rev/archive/2024/r2024_01/16/image/fig_03.png)

4. Security Techniques for PSIRTs that Protect Customer Businesses

The rise in cyberattacks on Internet of Things (IoT) products such as Internet-connected vehicles and medical devices calls for the suppliers of these products to address the vulnerabilities that make such attacks possible. This has led to an increasing number of IoT product vendors establishing product security incident response teams (PSIRTs) that centralize the security risk management associated with product vulnerabilities.

To provide support services for PSIRTs, Hitachi has developed techniques to assist with the collation of information on vulnerabilities and with assessing the level of threat that they pose to products. For information collation, Hitachi has developed a suite of techniques that address the problems of dealing with the large amount of vulnerability information that is published and the increasing amount of work needed to sort through it, reducing workloads while also preventing relevant information from being overlooked. These include techniques for identifying information topics, prioritizing the order in which to address them, and checking for false negatives. For threat assessment, Hitachi has addressed the difficulty of interpreting vulnerability information in the absence of a software bill of materials (SBOM) that provides detailed product management information. This involves extracting functional and structural elements from a product model to produce a virtual SBOM that structures this information hierarchically, thereby enabling the extent of the threat posed by a vulnerability to be estimated even when the SBOM is incomplete.
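
The hierarchical structuring described above can be pictured with a small sketch. The source does not describe the data format of the virtual SBOM, so the tree layout, the product and component names, and the function `affected_paths` are all hypothetical. The sketch only shows how a hierarchy lets a vulnerable component be traced back to the product functions that depend on it.

```python
def affected_paths(node, vulnerable, path=()):
    """Yield every path from the product root to a vulnerable component."""
    here = path + (node["name"],)
    if node["name"] in vulnerable:
        yield here
    for child in node.get("children", []):
        yield from affected_paths(child, vulnerable, here)

# Hypothetical product model: functions at the top, components below.
product = {
    "name": "infusion-pump",
    "children": [
        {"name": "remote-update", "children": [
            {"name": "tls-library"}, {"name": "http-client"}]},
        {"name": "dose-control", "children": [
            {"name": "rtos-kernel"}]},
    ],
}

paths = list(affected_paths(product, {"tls-library"}))
for p in paths:
    print(" > ".join(p))
```

In this invented example, a vulnerability in `tls-library` touches the remote-update function but not dose control, which is the kind of scoping that helps when estimating how serious a threat is.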

By supplying these techniques to PSIRTs, Hitachi is helping to improve customer business continuity through a faster and more efficient response to vulnerabilities.

![[04] Security techniques for PSIRTs that protect customer businesses](/rev/archive/2024/r2024_01/16/image/fig_04.png)

5. Decentralized Security Operation

While cyberattacks in recent years have included orchestrated attacks that extend across multiple industries, the lack of information and security response capabilities at individual organizations has caused problems, resulting in slow responses to such attacks and increased costs. To address this, Hitachi has been working to establish a decentralized security operation in which organizations help each other.

Although few in number, security specialists are scattered across different organizations. This initiative has involved developing techniques that use functions for automating security operations to detect and respond to attacks in a coordinated manner, while also allowing security specialists to share their knowledge with one another. Specifically, knowledge of detection and response measures is automatically generated from incident response records and other records (information that is difficult for other organizations to use because it exists as unstructured data) and made available in machine-readable form. Actions are executed automatically, thereby enabling the organizations to respond to a wide range of cyberattacks. The goal is for these techniques to reduce the risk of ever-evolving cyberattacks and cut the cost of responding.

In the future, Hitachi intends to contribute to the building of safe and secure infrastructure by commercializing the technology and expanding the number of participating organizations.

6. Generative AI Assistant for Troubleshooting in Integrated Operations Management JP1

Troubleshooting IT systems requires technical knowledge and skills, such as checking and investigating system status, configuration, and error messages and then acting on the findings.

To reduce the load on IT system operations and management, Hitachi has developed a prototype of a generative AI assistant for troubleshooting.

The assistant works with Integrated Operations Management JP1. It generates answers that suit the state of the IT system and its failures by supplementing the user's question with information that the question does not contain, such as operational information about the IT system.

In addition, the assistant obtains technical knowledge by retrieving information from the JP1 manuals and having the generative AI summarize it. This technology can shorten the time needed for IT system recovery.

It also reduces the burden of checking system status and error messages against the JP1 manuals in the urgent period after a failure occurs, contributing to safe and secure IT system operation.
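
The flow described above (supplement the question with operational information, retrieve matching manual text, then hand both to a generative model) can be sketched as follows. This is a minimal illustration and not the prototype itself: the word-overlap ranking stands in for whatever retrieval method is actually used, no JP1 interface is called, and the manual sections, system state, and function names are invented.

```python
import re

def tokens(text):
    """Lowercase word set used for a crude overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, manual_sections, top_k=1):
    """Rank manual sections by how many words they share with the question."""
    q = tokens(question)
    ranked = sorted(manual_sections,
                    key=lambda s: len(q & tokens(s["text"])),
                    reverse=True)
    return ranked[:top_k]

def build_prompt(question, system_state, manual_sections):
    """Combine question, operational data, and retrieved manual text."""
    # Supplement the question with information the user did not type.
    enriched = f"{question} {system_state['last_error']}"
    hits = retrieve(enriched, manual_sections)
    context = "\n".join(f"[{h['id']}] {h['text']}" for h in hits)
    return (f"System state: {system_state}\n"
            f"Manual excerpts:\n{context}\n"
            f"Question: {question}")

# Hypothetical manual sections and system state, invented for illustration.
manual = [
    {"id": "sec-1", "text": "If the job scheduler service stops, restart the scheduler service."},
    {"id": "sec-2", "text": "Disk full errors: delete old log files to free space."},
]
state = {"host": "host-a", "last_error": "disk full on /var"}
prompt = build_prompt("Why did the batch fail?", state, manual)
print(prompt)
```

In this toy data the bare question matches the wrong manual section, while the question supplemented with the last error message retrieves the disk-full section, which is the motivation for adding operational information before retrieval.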

7. Data Compression Service Featuring Deep Learning to Address Increasing Storage Costs

If large volumes of data are to be used to create a data-driven society, it will be important to reduce the cost of data storage. In response, Hitachi has been studying techniques for the use of deep learning in data compression and how to offer it as a service.

One example relates to the digitalization of infrastructure maintenance and concerns the increasing cost of storing the video data needed for analysis. To address this problem, Hitachi has developed a technique that works by having the user specify types of objects. It then controls image quality and data volume based on object type recognition, achieving high data compression while maintaining high image quality for the regions in an image that show objects of the specified types. By offering the technique as a service and bundling it with data storage, the amount of data to be stored can be reduced. In this example, doing so can cut the cost of storing large amounts of video data while maintaining high image quality in the image regions that show equipment in need of maintenance and achieving high compression in other regions.
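
The principle of spending data volume only where it matters can be shown with a small sketch. It does not reproduce the deep-learning compression itself: the region mask is written by hand rather than produced by object recognition, and simple quantization stands in for the actual codec. The names `quantize` and `compress` and the step sizes are assumptions made for illustration.

```python
def quantize(value, step):
    """Round a 0-255 pixel value down to a multiple of `step`."""
    return (value // step) * step

def compress(image, mask, fine_step=1, coarse_step=64):
    """Keep detail where mask is True; quantize coarsely elsewhere."""
    return [[quantize(p, fine_step if m else coarse_step)
             for p, m in zip(row, mask_row)]
            for row, mask_row in zip(image, mask)]

# Tiny grayscale image and a hand-written mask marking the object of interest.
image = [[10, 70, 130, 200],
         [15, 75, 135, 205]]
mask = [[False, True, True, False],
        [False, True, True, False]]

out = compress(image, mask)
print(out)
```

Pixels inside the mask are left untouched, while background pixels collapse onto a handful of values, which a subsequent entropy coder can store in far fewer bits.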

Hitachi intends to continue researching the technology with a view to its practical application, anticipating its use for data storage in the digitalization of a variety of different activities across the public sector, finance, transportation, manufacturing, and other industries.

![[07] Potential application of data compression service in infrastructure maintenance](/rev/archive/2024/r2024_01/16/image/fig_07.png)

8. Improving Accuracy of HDD Failure Prediction in Storage Applications

Hitachi provides hard disk drive (HDD) failure prediction in storage and high-availability IT systems through its hybrid cloud solution, "EverFlex from Hitachi". To further improve IT system availability, Hitachi has now developed a technique that uses machine learning to automatically generate detection conditions that can improve the accuracy of HDD failure prediction and enable earlier detection.

The technology uses machine learning to analyze error outputs from HDDs before they fail and from HDDs that are operating correctly in order to identify the behaviors that immediately precede a failure. From these, it automatically generates conditions for detecting HDDs that are likely to fail before the failure occurs. Because the conditions are derived from information accumulated through storage operations, HDD failure prediction improves continuously, becoming both more accurate and earlier.
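
What it means to generate a detection condition from data can be sketched in its simplest form. The source does not say which machine learning method is used, so the single-feature threshold search below is only an assumed stand-in, and the error counts are invented.

```python
def best_threshold(healthy_counts, failing_counts):
    """Pick the error-count threshold that maximizes classification accuracy.

    A drive is flagged when its error count is >= the threshold.
    Returns (threshold, accuracy).
    """
    candidates = sorted(set(healthy_counts) | set(failing_counts))
    total = len(healthy_counts) + len(failing_counts)
    best = (None, -1.0)
    for t in candidates:
        correct = (sum(c < t for c in healthy_counts)
                   + sum(c >= t for c in failing_counts))
        accuracy = correct / total
        if accuracy > best[1]:
            best = (t, accuracy)
    return best

# Hypothetical error counts per drive over some observation window.
healthy = [0, 1, 0, 2, 1, 3]
failing = [5, 8, 4, 2, 9]

threshold, accuracy = best_threshold(healthy, failing)
print(f"flag drives with >= {threshold} errors (accuracy {accuracy:.2f})")
```

Re-running such a search as new operating records accumulate is one simple way the detection condition could keep improving over time.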

9. Grid-aware IT Workload Control Technology

While significant growth is anticipated in renewable energy generation as part of the transition to carbon neutrality, the challenges include control of renewable energy output based on constraints on the electricity grid and the rising cost to society of augmenting the electricity infrastructure. Electricity demand from data centers (DCs) is also anticipated to rise significantly with the spread of AI and other applications. As it is more economical to pay the communication costs for IT workloads or processing data than it is to transmit electric power, it makes sense to use locally generated renewable energy by locating DCs in areas with a large amount of renewable generation and running IT workloads at regional DCs.

Through collaborative creation with TEPCO Power Grid, Inc., Hitachi is undertaking research and development aimed at resolving challenges through the control of electricity demand from widely distributed DCs. This work has developed a technology for optimizing electricity demand across different areas, something that was difficult to achieve using past distributed energy resources (DERs). This is done by controlling when and where data center IT workloads are executed. The work has also demonstrated that the technology can balance electricity supply and demand across multiple areas by connecting to remotely located DCs and responding to balancing requests by shifting where IT workloads are run, shifting when they run at a particular DC, and controlling air conditioning and other facilities at the DC.
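
The "where" part of this control can be illustrated with a small greedy sketch. It is not the optimization method used in the research, which the source does not describe. The workload names, power figures, and area names are hypothetical, and timing and facility control are left out.

```python
def place_workloads(workloads, surplus_kw):
    """Assign each workload to the area with the most remaining surplus.

    workloads: dict name -> power demand in kW (hypothetical figures)
    surplus_kw: dict area -> surplus renewable power in kW
    Returns (placement, remaining surplus per area).
    """
    remaining = dict(surplus_kw)
    placement = {}
    # Place the largest workloads first so they get the biggest surplus.
    for name, demand in sorted(workloads.items(), key=lambda kv: -kv[1]):
        area = max(remaining, key=remaining.get)
        placement[name] = area
        remaining[area] -= demand
    return placement, remaining

workloads = {"training": 300, "batch": 200, "analytics": 100}
surplus = {"area_a": 400, "area_b": 250}

placement, remaining = place_workloads(workloads, surplus)
print(placement, remaining)
```

A fuller treatment would also decide when each workload runs and respect communication costs and deadlines, but the sketch shows how moving computation can absorb local renewable surplus without moving electricity.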

In the future, Hitachi intends to deepen its collaboration with DC operators and interoperation with other DERs with a view to the practical deployment of this technology.

![[09] Trialing of supply and demand balancing across multiple areas through control of IT workloads](/rev/archive/2024/r2024_01/16/image/fig_09.png)

10. Low-Code Service Mashup Platform

Hitachi has developed technology for service mashup platforms that enables the rapid development of application systems using low-code techniques. For its service mashup platform, Hitachi has adopted modular development methodologies for system development and is seeking to achieve composable development that works by linking existing service asset components together.

The service mashup platform is equipped with a pipeline engine. By using it as a means for reusing and linking software-as-a-service (SaaS), micro-services, and business tasks or other service asset components, processes and data can be brought together with minimal coding to accomplish service tuning, business process automation, and so on. Another feature is a technique for maintaining data consistency across distributed systems that enables highly reliable transactions across different services. This gives the platform the ability to cope with complex applications that require consistency of data across multiple service components, such as allowing services to reserve resources or execute transactions with one another.
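
One common way to keep data consistent when a pipeline spans several services is to pair each step with a compensating action that undoes it if a later step fails. The source does not name the technique used in the platform, so the sketch below is an assumption chosen for illustration, and the seat reservation and payment functions are invented.

```python
def run_pipeline(steps):
    """Run (action, compensation) pairs in order; undo on failure.

    Returns True if every step succeeded, False if the pipeline rolled back.
    """
    completed = []
    for action, compensate in steps:
        try:
            action()
        except Exception:
            # Undo already-completed steps in reverse order.
            for undo in reversed(completed):
                undo()
            return False
        completed.append(compensate)
    return True

# Hypothetical services: a seat inventory and a payment ledger.
inventory = {"seats": 1}
ledger = []

def reserve_seat():
    if inventory["seats"] < 1:
        raise RuntimeError("no seats")
    inventory["seats"] -= 1

def release_seat():
    inventory["seats"] += 1

def charge_card():
    raise RuntimeError("payment declined")

def refund_card():
    ledger.append("refund")

ok = run_pipeline([(reserve_seat, release_seat), (charge_card, refund_card)])
print(ok, inventory, ledger)
```

Here the payment step fails, so the seat reserved by the first service is released again and no refund is issued for a charge that never happened.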

Use of the service mashup platform can accelerate development that involves co-creation with customers. It allows for a requirements definition process in which a realistic image of the system is provided on-the-spot to enable the parties involved to discuss matters without having to take their requests away with them. It also makes it easier to use development and operation practices in which the system is updated progressively through a repeated process of trial and verification to achieve customer success.

11. Technology for Self-sovereign Identity Platform to Support Web 3

Self-sovereign identity (SSI) is a new concept in ID management that gives users control over their own personal information and the associated credentials as well as the ability to choose when to disclose it. It is attracting attention as one of the technologies that will enable the achievement of Web 3.

SSI is also used in business-to-business (B2B) sectors such as finance, manufacturing, and healthcare. The figure shows an example of its use to certify completed transactions between companies. A difficulty that has faced small and medium-sized businesses in the past is that, when entering into a new trading arrangement, they have found it difficult to gain the trust of their trading partners because they are unable to provide proof of past transactions. This has posed an obstacle to winning contracts. The use of an SSI platform allows businesses to provide their new trading partners with proof-of-trade credentials issued to them by their existing partners while still restricting the extent of disclosure. As the new trading partners are able to verify the authenticity of the credentials, this makes the process of entering into a new trading arrangement more efficient.

Implementing this type of SSI requires the ability to verify data without relying on a designated third party. In practice, this means using a blockchain.

Hitachi has been researching and developing an SSI platform that uses the well-known Hyperledger* open-source software (OSS) for blockchain and is expediting commercialization by contributing to the technology as a premium member of the OSS development community.

![[11] Use case for self-sovereign ID platform: certification of B2B transactions](/rev/archive/2024/r2024_01/16/image/fig_11.png)

12. AI Management Technique for Maintaining AI Quality during Operations

AI has been finding practical applications across a wide range of areas in recent years, including in mission-critical systems. With this has come the problem of “concept drift,” the tendency for the accuracy of an AI to diminish over its operating life due to changes in external circumstances.

To resolve this problem and maintain AI quality, Hitachi has developed an AI management technique that can detect concept drift early and then retrain the AI model efficiently using a selection of optimal data. While the use of AIs to solve regression problems is common in industry, there were no effective techniques for this application until now. Accordingly, Hitachi developed a new algorithm for streaming active learning based on the regression-via-classification technique, a method that is suitable for regression problems. Hitachi demonstrated the utility of the algorithm by showing that it could maintain high regression accuracy across multiple datasets even when only a limited amount of valid case data was available*.

A paper describing this work has been accepted by the 2024 IEEE International Conference on Acoustics, Speech, and Signal Processing (IEEE ICASSP 2024), a leading conference in the field of signal processing.

- * S. Horiguchi et al., “Streaming Active Learning for Regression Problems Using Regression via Classification,” arXiv:2309.0101 (2023).
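
The two ideas named in this item, regression via classification and streaming active learning, can be sketched in a few lines. This is not the algorithm from the paper: the nearest-centroid classifier, the margin-based query rule, the class name `RvCRegressor`, and the data are all assumptions chosen to keep the example short.

```python
def bin_index(y, low, width, n_bins):
    """Map a continuous target to a class (bin) index."""
    return min(n_bins - 1, max(0, int((y - low) // width)))

class RvCRegressor:
    """Regression via classification with a nearest-centroid classifier."""

    def __init__(self, low, high, n_bins):
        self.low, self.n_bins = low, n_bins
        self.width = (high - low) / n_bins
        self.sums = [0.0] * n_bins    # sum of x per bin
        self.counts = [0] * n_bins    # number of labeled samples per bin

    def update(self, x, y):
        """Learn from one labeled sample."""
        k = bin_index(y, self.low, self.width, self.n_bins)
        self.sums[k] += x
        self.counts[k] += 1

    def _distances(self, x):
        return sorted((abs(x - self.sums[k] / self.counts[k]), k)
                      for k in range(self.n_bins) if self.counts[k])

    def predict(self, x):
        """Return the center of the bin whose centroid is nearest to x."""
        _, k = self._distances(x)[0]
        return self.low + (k + 0.5) * self.width

    def is_uncertain(self, x, margin=0.5):
        """Ask for a label when the two nearest classes are almost tied."""
        d = self._distances(x)
        return len(d) < 2 or (d[1][0] - d[0][0]) < margin

# Streaming loop: a label is requested only when the model is uncertain.
model = RvCRegressor(low=0.0, high=4.0, n_bins=2)
stream = [(1.0, 0.5), (3.0, 3.5), (0.8, 0.4), (2.1, 2.2)]
queried = 0
for x, y in stream:
    if model.is_uncertain(x):
        model.update(x, y)
        queried += 1

print(queried, model.predict(0.8), model.predict(3.4))
```

The third sample in the stream lies well inside a known class, so no label is requested for it. This selective querying is what keeps retraining efficient when labeled data is scarce.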

13. Generative AI Suitable for Detecting Rare Events

Influenced by factors such as urbanization and climate change due to global warming, instances of major damage caused by natural disasters have been occurring in recent years not only in Japan, but also around the world. For rescue and recovery work to proceed accurately and efficiently in the event of a disaster, it is very helpful to be able to acquire a rapid overview of the damage by using AI for advanced recognition and situation assessment.

A disaster is a typical example of a type of event that, while infrequent, has a major impact when it does occur. This makes it difficult to collect data for training an AI on such events. Hitachi has addressed this problem by means of an image analysis technique that can detect rare events and that works by using generative AI and computer graphics to replicate rare events in a way that can be used as training data.

In the future, Hitachi intends to pursue social innovation through the use of the technique to support disaster response, engaging in collaborative creation with local government as well as other partners such as infrastructure maintenance and insurance companies. By doing so, it will contribute to creating a resilient society in which people can live safely and securely.

14. Expanding Applications of CMOS Annealing

Toward the realization of a sustainable society, there is a growing need to optimize the operations of social systems in consideration of people’s lifestyles and the environment. To address this need, Hitachi has developed CMOS (complementary metal-oxide semiconductor) annealing, which enables the rapid search for practical solutions of optimization problems, and is applying it to social systems.

One recent example is a collaboration with Aisin Corporation to reduce traffic congestion around manufacturing plants. When workers leave the plants at a set time, congestion occurs on the roads that many of their vehicles use. This suggested that congestion could be reduced by optimizing the vehicles’ departure times so as to spread traffic out over the surrounding roads. To test this idea, CMOS annealing was used to optimize the departure times while taking account of the routes the vehicles take out of the plant car parks. Evaluation of the optimized plan using a traffic simulation confirmed that congestion could be eliminated and that the vehicles’ total commuting time could be cut to less than one-third of its original value.
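The idea of spreading departures across time slots can be illustrated with a minimal sketch. It is not Hitachi’s formulation: ordinary simulated annealing in software stands in for CMOS annealing, the cost function (the sum of squared vehicle counts per slot) is an assumption, and the exit routes from the car parks are ignored. The names `congestion_cost` and `anneal_departures` and all parameter values are hypothetical.

```python
import math
import random


def congestion_cost(assignment, n_slots):
    """Sum of squared vehicle counts per departure slot (lower means more spread out)."""
    counts = [0] * n_slots
    for slot in assignment:
        counts[slot] += 1
    return sum(c * c for c in counts)


def anneal_departures(n_vehicles, n_slots, steps=5000, t_start=5.0, t_end=0.01, seed=0):
    """Assign each vehicle to a departure slot using plain simulated annealing."""
    rng = random.Random(seed)
    current = [0] * n_vehicles  # assumed starting point: everyone leaves in the first slot
    current_cost = congestion_cost(current, n_slots)
    best, best_cost = list(current), current_cost

    for step in range(steps):
        # Geometric cooling schedule from t_start down to t_end.
        temp = t_start * (t_end / t_start) ** (step / max(steps - 1, 1))

        # Propose moving one randomly chosen vehicle to a random slot.
        i = rng.randrange(n_vehicles)
        old_slot = current[i]
        current[i] = rng.randrange(n_slots)
        new_cost = congestion_cost(current, n_slots)
        delta = new_cost - current_cost

        # Always accept improvements; accept worse moves with Boltzmann probability.
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            current_cost = new_cost
            if current_cost < best_cost:
                best, best_cost = list(current), current_cost
        else:
            current[i] = old_slot  # reject the move

    return best, best_cost


plan, cost = anneal_departures(n_vehicles=12, n_slots=4)
print("slot per vehicle:", plan)
print("cost:", cost, "(all vehicles in one slot would cost", 12 * 12, ")")
```

A realistic model would add terms for road capacity along each exit route and for limits on how far a departure may be shifted, which this sketch leaves out.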

Based on the knowledge gained from this project, Hitachi will continue working to improve traffic conditions on the roads around various facilities and to contribute to improving the energy efficiency of the transportation system and reducing CO2 emissions.

[14]Use of CMOS annealing to optimize departure time planning![[14]Use of CMOS annealing to optimize departure time planning](/rev/archive/2024/r2024_01/16/image/fig_14.png)

15. Social Infrastructure DX

Given the aging of social infrastructure, age-related deterioration of equipment is likely to become more common in the future. Experienced maintenance workers are also aging and in short supply, making it difficult to rely on on-site visual inspections to uphold the quality of social infrastructure operation and maintenance. In response, Hitachi operates a maintenance platform business for social infrastructure that supports more efficient maintenance by using digital technologies to remotely monitor equipment and to make information available.

Recognizing that the operation of this business comes with an increasing need for information platforms that enable customers to manage their social infrastructure in digital space and for the integrated management of equipment that is operated by different organizations, Hitachi has developed digital twins for social infrastructure that provide unified management of both above-ground and below-ground equipment. It has also developed a technique for aligning locations in drawings that can be used on equipment ledgers and drawings managed by different organizations.

Through the use of these digital twins for infrastructure, Hitachi intends to step up its contribution to making social infrastructure maintenance planning and maintenance work more efficient.

16. Highly Efficient Materials Search Using Chemicals Informatics

Hitachi has developed Chemicals Informatics (CI)* as a means of searching for materials that works by using AI to analyze published data from patents and research papers. CI was used to search for a material for use in a temperature measurement device that could act as an adhesion layer between a thin film of platinum (Pt) and its glass substrate (SiO2), thereby preventing the Pt film from peeling. The search identified titanium (Ti) as a material that has a higher peel strength than both Pt and SiO2.

To verify that Ti could fulfill this purpose, molecular simulation was used to calculate the peel energy and a scratch test was performed to measure the critical scratch load for peeling. In both cases, the results indicated that Ti had a higher peel strength. It was also found that the mechanism responsible for this high peel strength was that the atoms at the Pt-Ti interface are arranged in an orderly pattern, a consequence of Ti and Pt having interatomic spacings that differ by only about 6% (0.296 nm and 0.278 nm respectively). When this knowledge was utilized to fabricate an actual device with a Ti adhesion layer, it was found that Pt peeling did not occur. This work demonstrated the utility of CI for materials search. Moreover, the entire process from search to experimental testing took only about two months, significantly shortening the material design time compared to when CI is not used (estimated at about three years). The intention is to utilize the technology in a wide variety of materials search and design applications aimed at achieving carbon neutrality.
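The figures quoted above can be checked with a few lines of arithmetic. This is only a sketch: normalizing the difference by the smaller spacing is an assumption (dividing by the larger one gives roughly 6.1%), and the function name `spacing_mismatch` is hypothetical.

```python
def spacing_mismatch(a_nm, b_nm):
    """Relative difference between two interatomic spacings, normalized by the smaller one."""
    return abs(a_nm - b_nm) / min(a_nm, b_nm)


TI_SPACING_NM = 0.296  # value quoted in the text
PT_SPACING_NM = 0.278  # value quoted in the text

mismatch = spacing_mismatch(TI_SPACING_NM, PT_SPACING_NM)
print(f"Ti/Pt spacing mismatch: {mismatch:.1%}")  # about 6.5%

# Search-to-test time quoted in the text: about 2 months with CI,
# against an estimated 3 years without it.
speedup = (3 * 12) / 2
print(f"Approximate reduction in design time: {speedup:.0f}x")  # 18x
```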

- * Name of software and service from Hitachi High-Tech Corporation

[16]Simulation and experimental results showing results of CI search for additives![[16]Simulation and experimental results showing results of CI search for additives](/rev/archive/2024/r2024_01/16/image/fig_16.png)

17. Development of Company Trust Assessment Technique

The environment in which supply chains operate has become more complex in recent years, driven by factors such as shortages of parts and materials resulting from the pandemic or political tensions, and the need to select partners who take “environment, social, governance” (ESG) issues seriously. To adapt to these societal changes, companies need to build robust supply chains.

In response, Hitachi has developed a solution that supports sourcing by manufacturers by providing centralized management of supplier information and by assessing supplier trustworthiness. The solution presents the links between the companies in a supply chain visually and uses ordering data and externally sourced information to score companies on the combined criteria of environment, social, governance, finance, quality, cost, and delivery (ESGFQCD). This enables manufacturers to choose the right suppliers and build robust supply chains.
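How a combined ESGFQCD score might be formed is not described here, so the following is only a sketch under stated assumptions: each criterion is scored from 0 to 100 and the combined score is a weighted average. The function name `trust_score`, the weights, and the supplier figures are all hypothetical and do not represent Hitachi’s scoring method.

```python
CRITERIA = ("environment", "social", "governance", "finance", "quality", "cost", "delivery")


def trust_score(scores, weights=None):
    """Weighted average of per-criterion scores (assumed to be on a 0-100 scale)."""
    if weights is None:
        weights = {c: 1.0 for c in CRITERIA}  # assumption: equal weighting by default
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    total_weight = sum(weights[c] for c in CRITERIA)
    return sum(weights[c] * scores[c] for c in CRITERIA) / total_weight


# Hypothetical suppliers with made-up scores.
supplier_a = dict(zip(CRITERIA, (80, 70, 75, 60, 90, 55, 85)))
supplier_b = dict(zip(CRITERIA, (50, 60, 65, 85, 80, 90, 70)))

# Hypothetical weighting that emphasizes the ESG criteria.
esg_heavy = {c: (2.0 if c in ("environment", "social", "governance") else 1.0) for c in CRITERIA}

for name, s in (("A", supplier_a), ("B", supplier_b)):
    print(name, round(trust_score(s), 1), round(trust_score(s, esg_heavy), 1))
```

Changing the weights changes which supplier ranks higher, which is why the choice of weighting would need to reflect each manufacturer’s sourcing policy.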

In the future, Hitachi intends to continue developing technologies for making supply chains more efficient and to contribute to the building of supply chains that can adapt to diverse societal changes.

18. Calculation of Carbon Footprints through Supply Chain Cooperation

Both the accurate assessment of CO2 emissions and efforts to reduce them are essential to decarbonizing entire industrial supply chains as part of the transition to carbon neutrality by 2050. It is standard practice to calculate the CO2 emissions of purchased inputs (Scope 3 Category 1 emissions) by multiplying the value or quantity of the inputs by the industry-average figure for per-unit emissions. The problem with this approach is that it does not recognize any reduction efforts made by the companies concerned.

The Green × Digital Consortium of the Japan Electronics and Information Technology Industries Association (JEITA) has formulated technical specifications for data exchange and a visualization framework that provide common data formats and interconnection methods based on the Partnership for Carbon Transparency (PACT) Pathfinder framework of the World Business Council for Sustainable Development (WBCSD). A trial to demonstrate their utility ran from September 2022 to June 2023.

Hitachi participated in the trial as both a solution provider and a user. Its work included developing a common gateway for linking to existing environmental solutions such as EcoAssist-Pro/LCA and to solutions from other vendors, and demonstrating that the technical specifications and the CO2 emission calculation methods are useful and can be used to determine supply chain CO2 emissions accurately and efficiently. In the future, Hitachi intends to contribute to the decarbonization of the global environment by promoting adoption by a wider range of corporate users.
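The limitation of the industry-average method can be seen in a minimal sketch that compares it with a calculation using per-unit emissions supplied by the supplier itself. The class `PurchasedInput`, the function `category1_emissions`, and every number below are hypothetical; they do not come from the JEITA specifications or from the trial.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PurchasedInput:
    name: str
    quantity_kg: float
    industry_avg_kgco2e_per_kg: float               # industry-average emission factor
    supplier_kgco2e_per_kg: Optional[float] = None  # supplier's own figure, if shared


def category1_emissions(inputs, use_supplier_data):
    """Total emissions of purchased inputs in kg CO2e.

    With use_supplier_data=False every input uses the industry average.
    With use_supplier_data=True a supplier-specific factor is used where
    one is available, falling back to the industry average otherwise.
    """
    total = 0.0
    for item in inputs:
        factor = item.industry_avg_kgco2e_per_kg
        if use_supplier_data and item.supplier_kgco2e_per_kg is not None:
            factor = item.supplier_kgco2e_per_kg
        total += item.quantity_kg * factor
    return total


purchases = [
    # This supplier has cut its emissions below the industry average.
    PurchasedInput("steel", 1000.0, 2.0, supplier_kgco2e_per_kg=1.5),
    # No supplier-specific data has been shared for this input.
    PurchasedInput("resin", 200.0, 3.0),
]

print(category1_emissions(purchases, use_supplier_data=False))  # 2600.0
print(category1_emissions(purchases, use_supplier_data=True))   # 2100.0
```

Only the second calculation reflects the steel supplier’s reduction effort, which is the motivation for exchanging emissions data along the supply chain in a common format.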

[18]Calculation of carbon footprints through supply chain links![[18]Calculation of carbon footprints through supply chain links](/rev/archive/2024/r2024_01/16/image/fig_18.png)

19. Technology for Using Open-source CPUs that Realizes Low-cost, Energy-efficient Control Systems

RISC-V* is an open standard for central processing units (CPUs) that offers a high level of cost-performance. When RISC-V is used to implement control systems, porting software between different types of CPUs becomes a challenge. A technology that eases software porting to RISC-V has been developed, helping to speed up new product development and to cope with semiconductor end-of-life (EOL).

Migrating software between different types of CPUs has required major modifications to accommodate differences in peripheral functions such as bit ordering of data and memory management. In this research, software reusability has been improved by extracting the CPU-dependent parts from several software implementation examples and systematizing their implementation according to usage. In addition, to reduce cost by consolidating multiple devices into one, a hypervisor (an environment for virtual CPU execution) has been used to make the consolidation easier. This reduces the amount of modification required when porting existing software by several thousand lines of code.
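One of the CPU-dependent differences mentioned above is the ordering of data. As a small illustration (not the technique developed in this research), the sketch below assumes the difference in question is byte order and shows how stating it explicitly in a single helper keeps the rest of the code independent of the CPU it runs on. The helper name `read_u32` and the sample bytes are hypothetical.

```python
import sys


def read_u32(buffer, offset, byteorder):
    """Decode a 32-bit unsigned integer using an explicitly stated byte order."""
    return int.from_bytes(buffer[offset:offset + 4], byteorder)


frame = bytes([0x12, 0x34, 0x56, 0x78])

# The same four bytes give different values depending on the byte order,
# so code that silently relies on the host CPU's order is not portable.
print(hex(read_u32(frame, 0, "big")))     # 0x12345678
print(hex(read_u32(frame, 0, "little")))  # 0x78563412
print("host byte order:", sys.byteorder)
```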

Hitachi plans to utilize the technology in onboard and wayside control equipment for railways, automotive electronic control units (ECUs), and industrial controllers.

This technology is derived from the project JPNP16007 commissioned by the New Energy and Industrial Technology Development Organization (NEDO).

20. Environmentally Conscious Production Solutions that Improve Factory Productivity and Reduce Environmental Load

Rising environmental awareness in recent years, including about the achievement of carbon neutrality, means that the manufacturing industry, in addition to its existing goal of improving productivity, has an increasing need to reduce its environmental load, including power consumption. Production improvements that combine higher productivity with lower environmental load call for identifying the factors in equipment and operating conditions that are detrimental to these goals and for devising improvements that effectively address those factors.

To achieve this, Hitachi has developed a simulation technique for making detailed predictions about productivity and environmental load. The core of the simulation is a technique for modeling power consumption under specific equipment operating conditions that is based on the results of analyzing time-series data on power consumption and other aspects of equipment operation. This can be used to assess the benefits of different production improvements in terms of key performance indicators (KPIs) that relate to productivity and environmental load. Such improvements might include the optimization of production planning, production line reconfiguration, or design changes.
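As a rough illustration of how a power consumption model built from time-series data can feed KPI comparisons, the sketch below estimates mean power per operating state and uses it to compare two production plans. It is a deliberate simplification under stated assumptions, not Hitachi’s simulation technique: the state names, the per-state averaging, the functions `fit_state_power` and `predict_energy_kwh`, and all figures are hypothetical.

```python
from collections import defaultdict


def fit_state_power(samples):
    """Estimate mean power draw (kW) per operating state from (state, kW) samples."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for state, kw in samples:
        totals[state] += kw
        counts[state] += 1
    return {state: totals[state] / counts[state] for state in totals}


def predict_energy_kwh(model, plan_hours):
    """Predict energy use for a plan given as hours spent in each operating state."""
    return sum(model[state] * hours for state, hours in plan_hours.items())


# Made-up time-series samples of equipment state and measured power.
samples = [("run", 10.0), ("run", 12.0), ("idle", 2.0), ("idle", 4.0)]
model = fit_state_power(samples)  # {'run': 11.0, 'idle': 3.0}

# Two hypothetical plans producing the same 600 units; plan B cuts idle time.
plan_a = {"run": 6.0, "idle": 2.0}
plan_b = {"run": 6.0, "idle": 0.5}
units = 600

for name, plan in (("A", plan_a), ("B", plan_b)):
    energy = predict_energy_kwh(model, plan)
    print(f"plan {name}: {energy} kWh, {energy / units:.4f} kWh per unit")
```

Here energy per unit serves as the environmental KPI, while the hours needed to produce the same output would serve as the productivity KPI.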

In the future, Hitachi intends to use this technique to supply environmentally conscious production solutions that both improve productivity and reduce environmental load.

[20]Optimization of productivity and environmental KPIs using power consumption model![[20]Optimization of productivity and environmental KPIs using power consumption model](/rev/archive/2024/r2024_01/16/image/fig_20.png)

21. ZEL: Real-time AI Control Technique for Industrial Plants

The declining birthrate, aging population, and shrinking workforce are prompting interest from the field of plant operation in using AI as an alternative to experienced operators. Using AI to automate plant control calls for the timeliness to cope with rapidly changing control targets, the ability to express visually how operational actions were determined, and a high level of explainability. Past practice in AI control has been to use a simulator in an iterative calculation, but this takes a long time to converge on a solution. The zero-episode learning (ZEL) technique developed by Hitachi achieves both timeliness and explainability by replacing this iterative calculation with a mathematically equivalent statistical model that is computed concurrently.

An experimental trial was conducted in which the method was used to control steam temperature in an incinerator generator over a period of approximately three months (90 consecutive days, excluding incinerator downtime). This demonstrated that the method was able to cope with daily changes in the target control value due to the variable quality of the waste being incinerated and to keep the steam temperature closer to the control target. Following this successful long-term operation, Hitachi released its RL-Prophet AI-equipped plant control system in 2023.

![[05]Decentralized security operation](/rev/archive/2024/r2024_01/16/image/fig_05.png)

![[06]Example of response triggered by fault notification event](/rev/archive/2024/r2024_01/16/image/fig_06.png)

![[08]Technique for improving accuracy of pre-emptive detection of HDD faults](/rev/archive/2024/r2024_01/16/image/fig_08.png)

![[10]Service mashup platform](/rev/archive/2024/r2024_01/16/image/fig_10.png)

![[12]AI management technique](/rev/archive/2024/r2024_01/16/image/fig_12.png)

![[13]Use of generative AI to create disaster images](/rev/archive/2024/r2024_01/16/image/fig_13.png)

![[15]Overview of digital twins for infrastructure](/rev/archive/2024/r2024_01/16/image/fig_15.png)

![[17]Overview of supply chain issues and their solutions](/rev/archive/2024/r2024_01/16/image/fig_17.png)

![[19]Facilitation of software porting](/rev/archive/2024/r2024_01/16/image/fig_19.png)

![[21]Comparison of reinforcement learning and ZEL](/rev/archive/2024/r2024_01/16/image/fig_21.png)